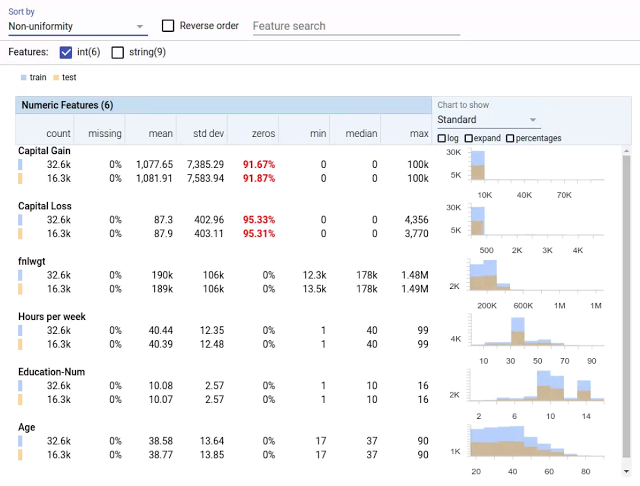

Google has released a new open-source tool named "Facets" for visualizing machine learning data sets (https://research.googleblog.com/2017/07/facets-open-source-visualization-tool.html).

With Facets, users can quickly understand and analyze the distribution of their data sets. The tool is written in Polymer and TypeScript, and it can be embedded in Jupyter notebooks and web pages.

We at aiso-lab are currently investigating whether the technology can also be used for visualization in some of our products.

Source: Google

The Network Security Lab team at the University of Washington has discovered an apparent weakness of deep neural networks (https://arxiv.org/pdf/1703.06857v1.pdf). DNNs seem to have problems recognizing negative images.

If the network was trained to recognize three images in white, it has not thereby learned to recognize them in black. The researchers see this as a weakness in the DNN's ability to generalize. A simple solution to this problem would be to generate the negative examples automatically and include them in training.
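The augmentation idea can be sketched in a few lines. This is only a minimal illustration, assuming 8-bit grayscale images stored as nested lists; the function names `negative` and `augment_with_negatives` are hypothetical and do not come from the paper.

```python
def negative(image, max_value=255):
    """Return the negative of a grayscale image given as rows of pixel intensities."""
    return [[max_value - pixel for pixel in row] for row in image]


def augment_with_negatives(dataset):
    """Return the dataset plus one negative copy of every (image, label) pair."""
    augmented = list(dataset)
    for image, label in dataset:
        # The label is unchanged: a negative still shows the same object.
        augmented.append((negative(image), label))
    return augmented


# Toy 2x3 "image": a bright stroke on a dark background (made-up values).
image = [[0, 255, 0],
         [0, 255, 10]]
dataset = [(image, "one")]
augmented = augment_with_negatives(dataset)
print(len(augmented), augmented[1][0])
```

Whether such augmentation is desirable depends on the task, as the following paragraph discusses.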

However, the question arises of how much generalization is actually desirable. For color images, it is important that the network does not ignore the color information. The network should have a general notion of, for example, the color red, but still be able to distinguish red from green. This shows how important the careful selection of the training set and the learning function is for training the network to fit your own needs.

In his latest paper, Yoshua Bengio—one of the world's leading AI professors at the Université de Montréal—established a link between deep learning and the concept of consciousness (https://arxiv.org/pdf/1709.08568.pdf).

The idea of a “Consciousness Prior” is inspired by the phenomenon of consciousness, defined as the formation of a low-dimensional combination of a few concepts constituting a conscious thought; that is, consciousness manifests itself as awareness at a particular time or instant.

The notion is that the interim results generated by a Recurrent Neural Network (RNN) can be used to explain the past and to plan the future. The system does not act on the basis of input signals, such as images or texts, but rather controls the "consciousness" established by the information abstracted from input signals.

Consciousness Prior could be used to translate information contained within trained neural networks back into natural language or into classical AI procedures with rules and facts. An implementation of this concept is not presented in the document, but Bengio proposes to integrate the approach into reinforcement learning systems.

Bengio’s ideas may very well lead the way to new frontiers in artificial intelligence. Time will tell whether his proposal is a revolutionary idea or just a "visionary" mind game.

New ImageNet results have been published. A team from Beijing set a new top-5 classification record with an error rate of 2.25%. In the last two years, large companies such as Google, Microsoft and Facebook have not participated in this challenge.
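For readers unfamiliar with the metric: a prediction counts as correct under top-5 scoring if the true class is among the five highest-scoring classes. The sketch below illustrates this with made-up scores for seven hypothetical classes; `top_k_error` is an illustrative helper, not part of any official evaluation toolkit.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of samples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for sample_scores, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda c: sample_scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Made-up scores for four samples over seven classes.
scores = [
    [0.05, 0.10, 0.40, 0.20, 0.10, 0.10, 0.05],  # true class 2 is ranked first
    [0.30, 0.25, 0.20, 0.10, 0.08, 0.05, 0.02],  # true class 3 is ranked fourth
    [0.30, 0.25, 0.20, 0.10, 0.08, 0.05, 0.02],  # true class 6 is ranked last
    [0.60, 0.10, 0.10, 0.10, 0.05, 0.03, 0.02],  # true class 0 is ranked first
]
labels = [2, 3, 6, 0]

top1 = top_k_error(scores, labels, k=1)
top5 = top_k_error(scores, labels, k=5)
print(top1, top5)
```

The second sample shows why top-5 error is always at most the top-1 error: the true class is not the best guess, but it is still among the five best.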

In a new paper titled "Revisiting Unreasonable Effectiveness of Data in Deep Learning Era," Google describes the results of training a neural network on an image set 300 times larger than ImageNet.

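The conclusion was that even with an increase from 3 million to 300 million training examples, the performance of the network keeps improving: it grows roughly linearly with the order of magnitude of the training set, that is, logarithmically in the number of images. Even at 300 million images, no flattening of the learning curve was observed.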

In addition, the trained network set a record in the COCO object detection benchmark. Notably, only the amount of training data was increased; no improvements were made to the model itself.

This is an impressive demonstration of the importance of big data in the context of deep learning. The best models can only be developed by companies that have the expertise to store and efficiently process enormous amounts of data.

aiso-lab is at your disposal as a competent partner for all challenges in the field of software and hardware.

Earlier this year, Google hosted a competition on Kaggle for YouTube video classification. Google provided 7 million videos with a total of 450,000 hours, which were to be classified into 4,716 categories. The third-placed team, a group of researchers from Tsinghua University and Baidu, has recently published its approach.

With a 7-layer deep LSTM architecture, the team achieves a score of 82.75% on the Global Average Precision (GAP) metric used in the competition.
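To give a feeling for the metric, here is a rough sketch of how a GAP score can be computed. It assumes the commonly described procedure: keep the top-k predictions per video, pool them into one list sorted by confidence, and sum precision times the change in recall. Normalizing recall by the total number of ground-truth labels is an assumption of this sketch, and the function name and toy data are made up; the official evaluation code may differ in details.

```python
def global_average_precision(predictions, ground_truth, top_k=20):
    """predictions: one {label: confidence} dict per video.
    ground_truth: one set of true labels per video."""
    pooled = []
    total_positives = 0
    for video_scores, true_labels in zip(predictions, ground_truth):
        total_positives += len(true_labels)
        # Keep only the top_k most confident labels of this video.
        best = sorted(video_scores.items(),
                      key=lambda item: item[1], reverse=True)[:top_k]
        pooled.extend((confidence, label in true_labels)
                      for label, confidence in best)

    # Rank all pooled predictions globally by confidence.
    pooled.sort(key=lambda item: item[0], reverse=True)

    hits = 0
    gap = 0.0
    for rank, (_, is_correct) in enumerate(pooled, start=1):
        if is_correct:
            hits += 1
            # Precision at this rank times the recall step of one positive.
            gap += (hits / rank) / total_positives
    return gap


# Made-up predictions for two videos.
predictions = [
    {"cat": 0.9, "dog": 0.6, "car": 0.2},
    {"car": 0.8, "cat": 0.3},
]
ground_truth = [{"cat"}, {"car", "dog"}]
gap = global_average_precision(predictions, ground_truth, top_k=2)
print(round(gap, 4))
```

Because all predictions are ranked in one global list, a model is rewarded for well-calibrated confidences across videos, not just for the right ordering within each video.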

The architecture of the temporal residual CNN used is as follows:

Source: Tsinghua University, 2017


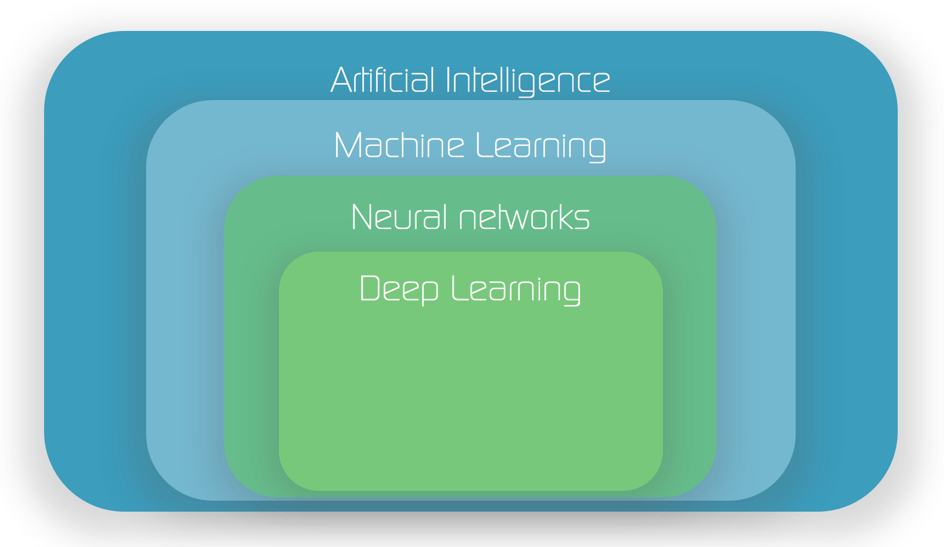

Artificial Intelligence, Machine Learning, Neural Networks and Deep Learning are currently very popular buzzwords, and they are often used in the same context. However, there are clear differences between them, so I would like to briefly go over their definitions and how they relate to one another.

Artificial Intelligence

The term Artificial Intelligence was coined in 1956 by Marvin Minsky, John McCarthy and other scientists during a workshop at Dartmouth College.

AI is an umbrella term for technologies that give machines intelligence, and it covers a wide range of methods, logics, procedures and algorithms. This is a very vague description, though, since even the term "intelligence" is difficult to define.


As a rule, AI systems are computer programs that imitate certain human "cognitive abilities", e.g. learning relationships or solving specific problems. AI research focuses on natural language processing (NLP), learning, knowledge processing and planning. In addition to computer science and mathematics, the neurosciences, sociology, philosophy, communication science and linguistics are also involved in AI research.

Machine learning

Machine learning is a sub-discipline of artificial intelligence and refers to statistical techniques that let machines perform tasks on the basis of learned relationships. Using collected data, computers "learn" algorithms (sequences of defined steps to achieve a goal) on their own, without being explicitly programmed by a human being. Examples of such algorithms and methods are Support Vector Machines, Decision Trees, Random Forests, Logistic Regression, Bayesian Networks and neural networks.

Neural Networks

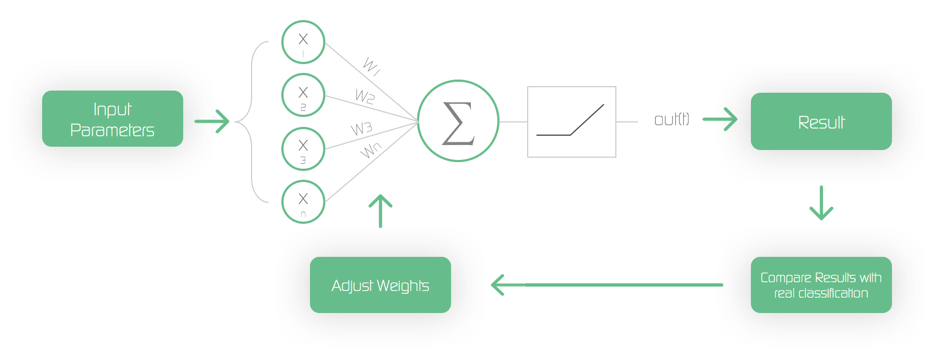

Neural networks are computer models for machine learning, which are based on the structure and functioning of the biological brain. An artificial neuron processes a plurality of input signals, and in turn, when the sum of the input signals exceeds a certain threshold value, it sends signals to further adjacent neurons.

A single neuron can only perform very simple computations, such as simple classifications. If, however, a large number of neurons are connected in different architectures, more and more complex relationships can be learned and the corresponding operations can then be carried out.
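The behavior of a single artificial neuron can be sketched as follows. This is a simplified threshold unit; the per-input weights are a standard addition that the description above does not spell out, and the values are hand-picked for illustration rather than learned.

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs exceeds the threshold."""
    activation = sum(x * w for x, w in zip(inputs, weights))
    return 1 if activation > threshold else 0


# Hand-picked weights and threshold that make the neuron act as a logical AND:
# it only fires when both inputs are active.
and_weights = [1.0, 1.0]
and_threshold = 1.5

outputs = [neuron([a, b], and_weights, and_threshold)
           for a in (0, 1) for b in (0, 1)]
print(outputs)
```

In a trained network, the weights and thresholds are not chosen by hand but adjusted automatically from examples.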

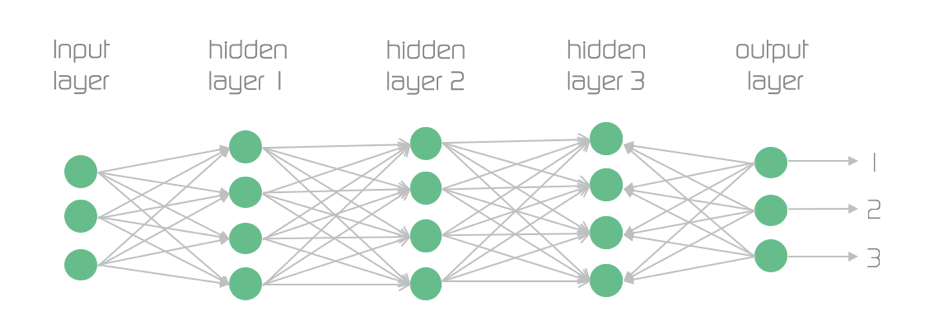

Simple neural networks consist of an input layer to which the input signals (e.g., image information) are applied, an output layer containing the neurons responsible for the different results, and a hidden layer that links the input and output layers. If a network architecture contains more than one hidden layer, one speaks of Deep Neural Networks or...

Deep-Learning

Deep learning is the area of machine learning that will change our lives most over the next few years.

A deep learning model is a neural network that has more than one hidden layer. Current architectures used in practice comprise up to one hundred layers with tens of thousands of neurons. These networks can be used to solve very complex tasks, e.g. image recognition, speech recognition, machine translation and many more.
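As a rough illustration of the layer structure, the sketch below passes an input through a tiny network with two hidden layers. The layer sizes, the weights, and the choice of ReLU and softmax are assumptions made for this example; real networks are far larger and learn their weights from data.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: each neuron sums its weighted inputs plus a bias."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Hidden-layer activation: pass positive values, clip negative ones to zero."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn the output layer's raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(inputs, layers):
    """layers: list of (weights, biases); the last entry is the output layer."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))


# Made-up weights: 3 inputs -> 2 hidden -> 2 hidden -> 3 output classes.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0], [-1.0, 1.0]], [0.0, 0.0, 0.0]),
]
probabilities = forward([1.0, 2.0, 3.0], layers)
print(probabilities)
```

Adding depth simply means adding more entries to `layers`; the forward pass itself stays the same.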

In the following posts of this blog I will add more details regarding the specific topologies and their application areas...stay tuned.

aiso-lab (Artificial Intelligence Solutions) develops AI solutions for companies, from consulting through pilot projects to production.

We are launching Germany's first complete offering for the development of innovative applications based on artificial intelligence. aiso-lab is run by Jörg Bienert, Michael Hummel and a team of experienced data scientists, consultants and project managers based in Cologne and Berlin.

The founders previously established ParStream in 2011, one of the first German big data start-ups, with offices in Cologne and Silicon Valley; it was acquired by Cisco in 2015.

"With aiso-lab we want to build on these success stories and our experience in implementing innovative projects and products," says Jörg Bienert, CEO of aiso-lab. "Artificial intelligence will revolutionize our work and our lives in many areas. We are at a threshold similar to the breakthrough of the Internet in the early 2000s. However, many companies in Germany are still unprepared for this upheaval."

Our offering ranges from introductory presentations and training courses, through detailed evaluation workshops and pilot implementations, to complete product development and the provision of the necessary infrastructure, including a dedicated hardware appliance.

"There is often a gap between current requirements, new ideas and the opportunities offered by new technologies in the field of AI," emphasizes Bienert. "We see ourselves as bridge builders who fill this gap and work closely with our customers in order to generate creative ideas and implement innovative products."