The term artificial intelligence (A.I. for short) refers to the study and design of intelligent behavior in machines. The field took its modern form in the 1950s. At this early stage it shared in the considerable optimism that was also evident in other areas of technology, but its promise did not hold up over the following decades. Fundamental problems emerged that called into question what artificial intelligence means and how it is understood. Nevertheless, concrete successes in the design of credible intelligent systems were achieved as well.

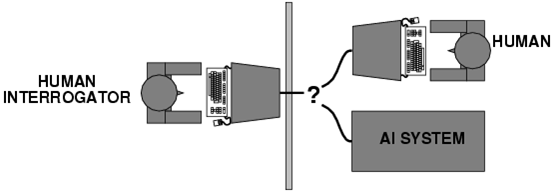

Characteristic of this new skepticism is the scientific debate that developed around the question of how machine intelligence can be demonstrated at all. In 1950, Alan Turing proposed the test that now bears his name, the Turing test. In this test, a person communicates via a teleprinter with a counterpart, without knowing whether that counterpart is a computer or a human being. If the counterpart is a computer and the test person nevertheless identifies it as a human being, then according to Turing this would demonstrate machine intelligence.

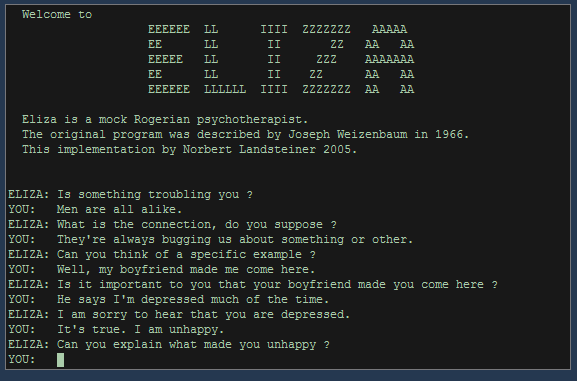

The method of this test was criticized repeatedly in the debate. In the 1960s, for instance, Joseph Weizenbaum created the ELIZA program, which was quite simply structured by today's standards and could simulate a conversation partner in various roles. ELIZA, especially in the role of a psychotherapist, repeatedly managed to "deceive" its human conversation partners, to Weizenbaum's own bemusement. The pseudo-therapeutic context later suggested that this was at least partly a projection on the human side: the test subjects read more into ELIZA's remarks than was actually there.
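How little machinery such a conversation simulator needs can be shown with a minimal sketch in the spirit of ELIZA. The rules, the pronoun table, and the names `RULES`, `REFLECTIONS`, `reflect`, and `respond` are assumptions made up for this illustration; they are not Weizenbaum's original script. The program matches a keyword pattern, swaps first and second person in the captured fragment, and inserts it into a canned reply.

```python
import re

# Hypothetical rules for illustration only; not Weizenbaum's original script.
# Each rule pairs a keyword pattern with a reply template.
RULES = [
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first and second person so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

FALLBACK = "Please go on."


def reflect(fragment):
    """Replace each pronoun in the fragment with its counterpart."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance):
    """Return the reply of the first matching rule, or a neutral fallback."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I am sad about my job."))
    print(respond("The weather is nice."))
```

Nothing in this sketch represents what "sad" or "job" means; any impression of understanding comes from the reader of the reply.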

There were also objections from a philosophical point of view: in 1980 John Searle put forward the Chinese room argument. In this thought experiment, a person with no knowledge of the Chinese language takes part in a Turing test while sitting in a room equipped with every imaginable book and resource on Chinese language and culture. Another individual then asks the test subject questions in Chinese and receives answers in Chinese. Searle's argument is that the person in the Chinese room could read and answer the questions without "understanding" a word of Chinese. That person is thus in the same situation as a computer that receives input from a human user and responds to it (in Chinese or otherwise).

The machine does not "understand" what is going on; it only works on the basis of syntactic rules, without any insight into the meaning of the content. Linguistic and sinological objections can of course be raised against Searle's thought experiment. It nonetheless raises a fundamental question: are intelligence and communication pure symbol processing on a syntactic basis, or is there a deeper semantic level of content and meaning?
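The idea of purely syntactic processing can be made concrete with a deliberately trivial sketch. The rule book, its entries, and the names `RULE_BOOK`, `DEFAULT_REPLY`, and `room_reply` are hypothetical and chosen only for this illustration. The program pairs incoming strings of symbols with outgoing strings of symbols and holds no representation of what either means.

```python
# Hypothetical rule book for illustration: it maps input symbol strings
# to output symbol strings and says nothing about their meaning.
RULE_BOOK = {
    "你好吗": "我很好",
    "你叫什么名字": "我没有名字",
}

# Reply used when no rule matches the incoming symbols.
DEFAULT_REPLY = "请再说一遍"


def room_reply(symbols: str) -> str:
    """Return the reply the rule book prescribes for the given symbols."""
    return RULE_BOOK.get(symbols, DEFAULT_REPLY)


if __name__ == "__main__":
    print(room_reply("你好吗"))
```

A real system would need vastly more rules, but in Searle's view adding rules does not change the character of the process: it remains a lookup on the form of the symbols.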

Against the background of such fundamental problems, research and development in the field of artificial intelligence began to divide into two main strands. The first is artificial general intelligence, that is, the design of machines that can take on all conceivable tasks with intelligent methods. Its most extreme form is strong A.I., which strives for the exact reproduction of human intelligence on a machine basis. The decisive assumption here is that if such a replica were equivalent to its model, the simulation would be identical to the original.

The second strand, narrow A.I., also known as weak A.I. or applied A.I., has been successful for a long time.

Here, machine intelligence is considered only in restricted and specialized areas. Examples of this are:

- Problem-solving strategies

- Data mining

- Machine learning

- Robotics

- Speech recognition

- Reasoning and decision-making on the basis of uncertain or fuzzy information

- Character and pattern recognition

In recent decades, many of these areas have made great progress. Search engines have mastered data mining and fuzzy search strategies, and mobile assistants in smartphones recognize the spoken words of their users almost flawlessly and access those search engines seamlessly. Robotics has also become a part of artificial intelligence, on the assumption that well-founded knowledge of the world, as the basis of intelligent thought and action, can only come about through physical contact with reality.

Artificial intelligence has thus come a long way. While mobile assistants now communicate with human beings, the fundamental questions about intelligence and about the distinction between simulation, syntactic symbol processing, and semantic understanding remain open.
