It was a great honor for aiso-lab that our CSO Dr. Alexander Lorz gave a plenary talk on Artificial Intelligence at ELIV (Electronics in Vehicles). Other speakers on that conference day (16 October 2018) in Baden-Baden included Prof. Shai Shalev-Shwartz, CTO of Mobileye; Dr. Stefan Poledna, member of the executive board of TTTech Computertechnik; and Gero Schulze Isfort, CSMO of Krone Commercial Vehicle.

Many thanks to VDI Wissensforum.

Source: VDI Wissensforum

Over 2.66 million people watched the interview that our CTO Gary Hilgemann gave yesterday to ARD, Germany's largest TV broadcaster. The interview emphasized the amazing possibilities that AI has created, the distinct advantages of our services, and the tremendous need for investment in research and development in Germany.

RAYPACK.AI and its sister company Rebotnix, which supplies the edge hardware, specialize in Visual Computing and provide customized, highly scalable AI software solutions that power manufacturing, quality control and other fundamental business processes on a multinational level. To achieve that, we use Rebotnix Gustav, a highly reliable hardware device, alongside other devices, which makes it easy for everyone to use our Visual Analyzer platform.

Our special thanks go to the report team Munich, Fabian Mader and colleagues, for their good overview of the state of AI in Germany.

Click here to see the whole report, and check out our products and use cases to get a better understanding of our services.

by Dr. Alexander Lorz and Ann Sophie Löhde

Ever wanted to dance like Michael Jackson or a prima ballerina, but you have two left feet? Thanks to a new AI algorithm, you can now get closer to this dream – at least on video!

See for yourself:

https://www.youtube.com/watch?v=PCBTZh41Ris

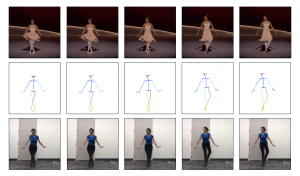

This video shows the AI algorithm developed by researchers at the University of California, Berkeley in action. Their approach is described in the paper "Everybody Dance Now".

In the source video, the dancer's posture is detected and represented as a so-called "pose stick figure". This posture is then transferred to the person in the target video.

To keep the result temporally coherent, a dedicated Generative Adversarial Network (GAN) is used. A second GAN makes the face as detailed and realistic as possible.

This AI algorithm opens up new possibilities for video editing. In the future, anyone will be able to use AI algorithms to perform movements and actions on video that they cannot do in real life. This advancement in AI makes it almost impossible to distinguish between real and generated video. Besides its many advantages, this new technology also poses some threats. For example, you can no longer be sure whether a person on video actually performed the movement themselves.

If you have any questions about image processing using AI, or if you would like to know how to generate more value with your images or videos, feel free to contact us at info@aiso-lab.com.

The tech giant complements its existing offering with the Edge TPU and offers the world's first fully integrated ecosystem for creating AI applications

By Dr. Alexander Lorz and Ann Sophie Löhde


Two years ago, Google introduced its Tensor Processing Units (TPUs), specialised chips for AI tasks in its data centres. Now Google is bringing this expertise from the cloud to the edge with the new Edge TPU. The small AI chip can perform complex Machine Learning (ML) tasks on IoT devices.

The Edge TPU was developed for "inference", the part of machine learning where an algorithm performs the task it was trained to do, such as detecting a defect on a product in a production line. In contrast to that, Google's server-based TPUs are optimized for training machine-learning algorithms.

These new chips are designed to help companies automate tasks such as quality control in factories. For this type of application, an edge device has some advantages over hardware that has to send data elsewhere for analysis, which requires a fast and stable internet connection. Inference on an edge device is generally safer, more reliable, and delivers faster results. That's the sales pitch, at least.

Of course, Google is not the only company that develops chips for this type of edge application. However, unlike its competitors, Google offers the entire AI stack. Customers can store their data in the Google Cloud, train AI algorithms on TPUs, and then run the trained algorithms on the new Edge TPUs. And most likely, customers will also build their machine learning software with TensorFlow, the machine learning framework developed by Google.

This kind of vertical integration provides tremendous benefits for Google and its customers. On the one hand, Google ensures a seamless connection between the different systems. On the other hand, customers can develop their AI in the most efficient way, using only one platform.

Google Cloud's Vice President for IoT, Injong Rhee, said: "Edge TPUs are an addition to our cloud TPUs to accelerate the training of ML algorithms in the cloud and then run inference on the edge. Sensors are becoming more than just pure data collectors: they can make smart decisions locally in real time."

Interestingly enough, Google is offering these Edge TPUs as a development kit, which enables customers to test the devices in their own environment. This departs from Google's previous policy of keeping its AI hardware secret. However, if Google wants to convince customers all over the world to rely solely on its integrated AI tools, it first needs to let them test their effectiveness. Hence, offering this development kit is not just a strategy to win new customers, but also shows Google's efforts to become the leading partner for companies' AI development efforts.

As soon as this announced development kit is available, our experts will run some tests and provide you with feedback. For further questions, please do not hesitate to contact us at info@aiso-lab.com.

Source: Google Blogs.

Your child's first steps, your daughter's dance performance or your son's fantastic skateboard tricks. These are the moments you will never forget, and still, they pass in the blink of an eye. Do you ever wish you had captured these moments not only on video but in slow motion, to enjoy every moment even more?

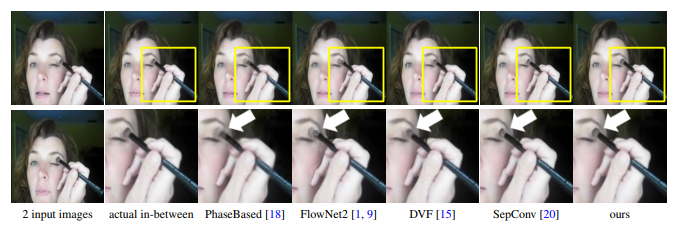

Nowadays, videos at 240 fps (frames per second) can be recorded with a mobile phone, while videos with higher frame rates still require a high-speed camera. These high frame rates also need an enormous storage capacity and are not feasible on a small mobile device due to their high energy consumption. So how can you capture these incredible, often unpredictable and unplanned moments and relive them in slow motion without carrying a bulky high-speed camera around all the time?

Scientists at the University of Massachusetts Amherst, NVIDIA and the University of California, Merced have proposed a promising solution. In their research project, they used Artificial Intelligence to convert videos with standard frame rates into super-slow-motion videos. To achieve this, they propose an end-to-end CNN (Convolutional Neural Network) model for frame interpolation that has an explicit sub-network for motion estimation. First, a flow computation CNN estimates the bidirectional optical flow between the two input frames. Next, the two flow fields are linearly combined to approximate the flows at the intermediate time step; a second CNN then refines these approximated flow fields and predicts soft visibility maps for interpolation.
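To make the linear combination step more concrete, here is a minimal sketch in plain Python. It assumes the approximation we understand the Super SloMo paper to use: the flow from an intermediate time t back to each input frame is a weighted sum of the two bidirectional flows. The function names `intermediate_times` and `approximate_flows` are our own illustration, not part of any published code. The sketch works on a single pixel and leaves out the refinement network and the visibility maps entirely.

```python
from typing import List, Tuple

Vec = Tuple[float, float]  # (dx, dy) displacement of one pixel


def intermediate_times(src_fps: int, dst_fps: int) -> List[float]:
    """Time steps in (0, 1) at which new frames are needed between two input frames."""
    if dst_fps % src_fps != 0:
        raise ValueError("this sketch assumes dst_fps is a multiple of src_fps")
    factor = dst_fps // src_fps
    return [i / factor for i in range(1, factor)]


def approximate_flows(f01: Vec, f10: Vec, t: float) -> Tuple[Vec, Vec]:
    """Linearly combine the flows 0->1 and 1->0 into flows t->0 and t->1.

    Assumed approximation (before any learned refinement):
        F_t->0 = -(1 - t) * t * F_0->1 + t^2 * F_1->0
        F_t->1 = (1 - t)^2 * F_0->1 - t * (1 - t) * F_1->0
    """
    ft0 = (-(1 - t) * t * f01[0] + t * t * f10[0],
           -(1 - t) * t * f01[1] + t * t * f10[1])
    ft1 = ((1 - t) ** 2 * f01[0] - t * (1 - t) * f10[0],
           (1 - t) ** 2 * f01[1] - t * (1 - t) * f10[1])
    return ft0, ft1


if __name__ == "__main__":
    # Turning 30 fps footage into 240 fps needs 7 new frames per input pair.
    print(intermediate_times(30, 240))
    # A pixel moving 8 px to the right between the two input frames.
    print(approximate_flows((8.0, 0.0), (-8.0, 0.0), 0.5))
```

For a pixel that moves steadily 8 pixels to the right, the sketch places it halfway at t = 0.5: 4 pixels back to the first frame and 4 pixels forward to the second. Around motion boundaries this simple approximation breaks down, which is where the refinement step described above comes in.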

Their research was recently published under the title "Super SloMo: High-Quality Estimation of Multiple Intermediate Frames for Video Interpolation", which describes all the necessary steps and the experimental results.

Last but not least, and fittingly for a post about videos: to get a better idea of this achievement, we recommend watching the highly informative video that was recently presented at the renowned CVPR 2018 (Conference on Computer Vision and Pattern Recognition).

On July 4 and 5, 2018, the conference "Artificial Intelligence" will take place in Berlin. In addition to significant contributions from AI managers at Siemens, Trumpf, SAP, Bosch, Continental, Otto and many others, I will give an introductory presentation on Deep Learning.

I look forward to an exciting exchange of experiences.

We have a special offer for subscribers to our newsletter. Don't miss it!

Kind Regards,

Jörg Bienert

Is Artificial Intelligence as dangerous as today's debate suggests? While the adverse effects of AI are still widely discussed, the German AI Association is setting out to shift the focus of that debate.

To clarify these points, our CEO Jörg Bienert, board member and president of the AI Association in Germany, gave an interview to Futurezone.de. He discussed in detail the role of the association and its goals, as well as many other aspects that are crucial to realising the benefits Artificial Intelligence has to offer. (read the interview)

On March 15, 2018, we - 24 companies and start-ups - founded the German AI Association. At the constituent meeting, the members advocated the following core demands:

- to create innovation-friendly legal certainty in the areas of civil, tax and data law in order to establish Germany as an attractive business location for the AI ecosystem and to strengthen its international competitiveness.

- to promote the human-centered and secure use of AI technologies and lead a society shaped by the social market economy into the digital age.

- to foster research, development and practical implementation of AI technologies through funding programmes, pilot projects, start-up financing and support for cooperation between start-ups, science and established companies.

- to stimulate education and science on the subject of Artificial Intelligence and to raise awareness in society and business of its opportunities and risks.

- to form an AI expert commission with representatives from politics, business (established companies and start-ups) and science to actively advise the Federal Government.

Details can be found in the press release at www.ki-verband.de.

We look forward to working with the (now 34) members. I am personally looking forward to working with my board colleagues Rasmus Rothe (Merantix) and Fabian Behringer (ebot7), and with the political advisory board led by Marcus Ewald, whose members are Thomas Jarzombek (CDU), Jens Zimmermann (SPD), Manuel Höferlin (FDP) and Petra Sitte (Die Linke).

Kind Regards,

Jörg Bienert

Two days after a great event! The first Handelsblatt KI conference, in which we took part, offered us two days of exclusive exchange of AI experience in Munich.

These days were full of excellent ideas, valuable information and profound insights into the world of Artificial Intelligence!

During this event, we had many interesting discussions at our booth, where we showed our current use cases for Visual Computing with AI. We were pleased to make many new contacts and to meet some friends from our network.

Our special thanks go to Handelsblatt, Euroforum, the speakers, experts, colleagues and all participants.

It was a great pleasure to take part in such a successful and unique event!

Only one week to go until the Handelsblatt AI Conference in Munich on 15 and 16 March.

Join us for two days of exclusive exchange of AI experience with Fei Yu Xu, Jürgen Schmidhuber, Frank Ruff, Bernd Heinrichs, Reiner Kraft, Norbert Gaus, Martin Hoffmann, Annika Schröder and many other top speakers.

Read the interview I gave to Handelsblatt in advance, and find more information about the event here.

Looking forward to seeing you in Munich!

Joerg Bienert
