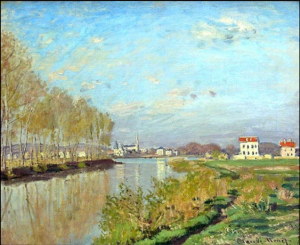

What did Claude Monet see when he painted this picture?

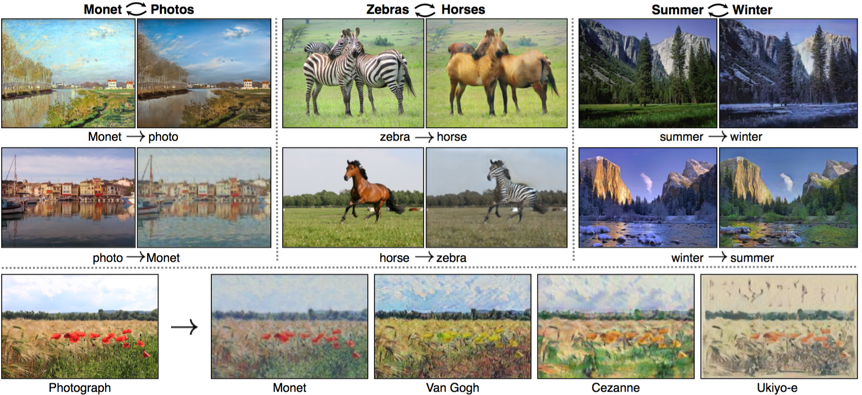

Source: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

If color photography had been available in 1873, we might have had a definitive answer to this question. But even without a color photograph, we can reasonably guess that it would show a clear blue sky and a river reflecting that sky.

The next question, of course, is what Monet would have painted if he had set up his easel in front of a different landscape.

In their paper "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks," scientists from Berkeley AI Research have developed an algorithm that can answer this question. Their approach resembles the way we humans would tackle the task. The easiest way would be to compare a Monet painting with a color photograph of the scene he was painting. Unfortunately, such paired data does not exist.

Instead, we can look at many Monet paintings and many landscape photographs. If we then look at a new landscape photograph, we can more or less imagine what a Monet painting of that scene would look like. This process also works in the opposite direction: when we look at a Monet painting, we can imagine what a photograph of the same scene would look like.

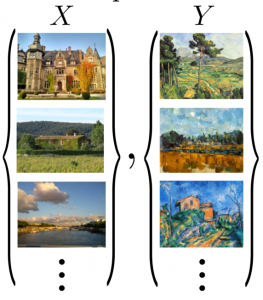

This thought experiment can be formulated as follows:

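The training data consist of a data set X of landscape photographs and a data set Y of paintings, with no pairing between individual images. From these data, we look for a mapping from landscape images to paintings and a mapping from paintings back to landscape images, such that translating an image and then translating it back yields approximately the original image.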
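The round-trip requirement can be written as a cycle-consistency loss: the paper penalizes the L1 distance between an image and its reconstruction after translating it to the other domain and back, in both directions. The sketch below is a minimal illustration, assuming images are flattened to lists of pixel values and using two hypothetical toy mappings, `G_toy` (landscape to painting) and `F_toy` (painting to landscape), in place of the neural networks. The adversarial losses that make the translated images look realistic are omitted.

```python
from typing import Callable, List, Sequence

Image = List[float]  # an image flattened to pixel intensities in [0, 1]
Mapping = Callable[[Image], Image]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G: Mapping, F: Mapping,
                           xs: List[Image], ys: List[Image]) -> float:
    """Forward cycle x -> G(x) -> F(G(x)) should return x;
    backward cycle y -> F(y) -> G(F(y)) should return y.
    xs and ys are unpaired: they need not show the same scenes."""
    forward = sum(l1_distance(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1_distance(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical toy "generators": G brightens an image, F darkens it.
def G_toy(img: Image) -> Image:
    return [min(1.0, p + 0.2) for p in img]


def F_toy(img: Image) -> Image:
    return [max(0.0, p - 0.2) for p in img]
```

For example, `cycle_consistency_loss(G_toy, F_toy, [[0.1, 0.5]], [[0.1, 0.9]])` is about 0.05: darkening the pixel value 0.1 clips it to 0.0, so the backward round trip cannot restore it. In the actual method, G and F are neural networks trained so that this loss stays small while discriminators judge their outputs as realistic.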

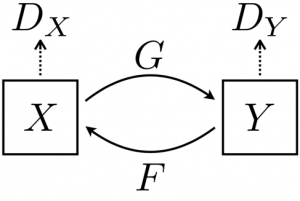

Source: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

The neural network used to find this mapping is called a Cycle-Consistent Adversarial Network (CycleGAN).

Cycle-Consistent Adversarial Networks can not only turn landscape images into paintings in the style of different painters but can also replace horses with zebras or change the seasons in images.

Source: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

If you would like to know how we can help you add value to your images, please contact us at info@aiso-lab.com.

Save the date! The leading conference on implementing AI in your company takes place on March 21st and 22nd in Munich. This fantastic international event would not be possible without the contribution of Handelsblatt and the AI Summit, key partners for business events!

In addition to outstanding contributions from AI managers at various companies, we are delighted that our CEO Jörg Bienert, President of the KI-Verband, will be there to discuss the responsibilities of the KI-Verband and its essential role in helping Germany catch up with new technologies.

To support your participation, we have a special offer for you! Subscribe now to our newsletter and get the exclusive opportunity to attend the event at a discount of up to €100. Click here to subscribe and stay tuned for our next newsletter!

Join us for two days of discussion and inspiration and let's exchange valuable information and experiences while enhancing our network.

Get your reduced ticket today and be there! Be where innovation is!

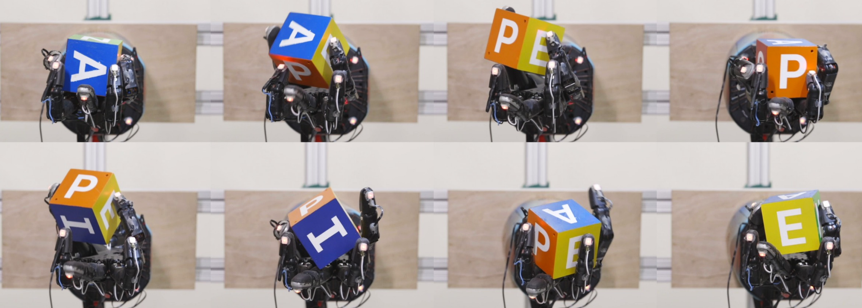

Source: Learning Dexterous In-Hand Manipulation https://arxiv.org/abs/1808.00177

While dexterous handling of objects is a daily routine for humans, this task remains a challenge for autonomous robots.

Since human hands can perform a vast number of tasks in many different environments with great skill, creating an artificial hand with comparable abilities is one of the most inspiring goals in robotics.

[video width="1184" height="736" mp4="https://aiso-lab.com/wp-content/uploads/2019/01/robotdexterity.mp4" autoplay="true" preload="auto"][/video]

Researchers at OpenAI have now taken a big step in this direction with the help of AI (see https://arxiv.org/abs/1808.00177). The results are impressive.

<iframe width="560" height="315" src="https://www.youtube.com/embed/jwSbzNHGflM" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe>

The four most important steps are:

- A) data are gathered in randomized environments using a large number of distributed simulations

- B) an LSTM-based control policy chooses the next action based on the position of the object and the positions of the fingertips

- C) a neural network predicts the position of the object from three camera images

- D) the results of steps B) and C) are combined to transfer the system to the real world
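The first step in the list above relies on domain randomization: each simulated episode uses different physical and visual parameters, so the learned policy cannot overfit to a single simulator setting and is more likely to cope with the real world. The sketch below illustrates only the sampling part. The parameter names (`object_mass`, `friction`, `camera_offset`, `light_intensity`) and their ranges are hypothetical and chosen for illustration; they are not the values used by OpenAI.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class SimParams:
    """Hypothetical per-episode simulation settings (illustrative only)."""
    object_mass: float      # kg
    friction: float         # friction coefficient
    camera_offset: float    # camera position jitter in cm
    light_intensity: float  # relative brightness


# Hypothetical ranges; a real system would tune many more parameters.
RANGES = {
    "object_mass": (0.05, 0.15),
    "friction": (0.5, 1.5),
    "camera_offset": (-2.0, 2.0),
    "light_intensity": (0.3, 1.0),
}


def sample_params(rng: random.Random) -> SimParams:
    """Draw each parameter uniformly from its range."""
    return SimParams(**{name: rng.uniform(lo, hi)
                        for name, (lo, hi) in RANGES.items()})


def randomized_episodes(n: int, seed: int = 0) -> List[SimParams]:
    """One independently randomized configuration per simulated episode,
    e.g. to be handed out to many distributed simulation workers."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]
```

Using a seeded `random.Random` instance keeps the sampled configurations reproducible, which helps when comparing training runs.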

Surprising facts before we close:

- The final version of the model was not trained on real data

- Tactile sensation was not necessary

If you have any questions about image processing using AI, or if you would like to know how to generate more value with your images or videos, feel free to contact us at info@aiso-lab.com.

Do you want to explore your passion for data? Then join us at the Data Festival 2019! Taking place from 20 to 21 March at the Muffatwerk in Munich, this festival aims to bring together the community of users and data experts! The focus is on topics such as Data Science & Machine Learning, Data Engineering & Architecture, and Data Visualization & Analytics.

In addition, an exciting pre-conference workshop day will take place on March 19, where you will have the chance to get a first impression of this event while expanding your network!

Dates:

19.03.2019 – Pre-Workshop-Day

20.03.2019 – 21.03.2019 – Conference

Location:

Pre-Workshop-Day – Sofitel Hotel in Munich

Conference – Muffatwerk

We are offering you the exclusive opportunity to attend the event (20 and 21 March) at a 50% discount. Click here to purchase your ticket and enter the coupon code SpeakerInvitAtion50%Bienert in the coupon code field. Hurry up! This offer is limited to three people.

See you there!

Dear aiso-lab friends, customers, and partners!

Since November 1st, 2018, our offices have been located in a beautiful new building at the Heumarkt in Cologne. Our new address is Gürzenichstrasse 27, 50667 Cologne, 4th floor. From here we have a bird's-eye view of the Heumarkt, the Deutzer Brücke, and the Rhine, and we enjoy a great atmosphere at any time!

Visit us for a cup of coffee or a glass of Glühwein and enjoy some joyful moments at the Christmas Market. Be part of our journey into the world of Artificial Intelligence and get inspired by new digital approaches and innovative ideas!

Please make a note of the following dates:

- KI & Karneval on Thursday, 28 February 2019, from 16:00, with short talks on Artificial Intelligence

- Carnival finale in the Gürzenich-Festhalle from 19:00 at the FEST IN BLAU of the Kölner Funken Artillerie blau-weiß von 1870 e.V.

More details on the agenda will be announced soon. We would appreciate early registration to help us plan our events.

Visit us at any time at our new premises and at our events.

We are looking forward to welcoming you!

Your aiso-lab team

On 13 and 14 November, on the occasion of its 125th anniversary, the VDE became the capital of future technologies!

At the VDE Tec Summit 2018, almost 190 speakers presented and discussed visionary digital ideas. Among them was our CEO Jörg Bienert, President of the KI-Verband, who presented the potential of AI, current expectations, and how our services can meet market needs.

He also emphasized the essential role of the KI-Verband in helping Germany catch up with new technologies.

The highlight was the anniversary evening, with keynote speeches on technology policy by Federal Economics Minister Peter Altmaier and Siemens CEO Joe Kaeser, and the big birthday party, including a trip into space to the International Space Station (ISS).

Thank you, Tec Summit, for this exciting journey!

Immediately after the federal government presented its AI strategy last week, our CEO, who is also President of the German AI Association, gave an interview on the daily evening news on Tagesschau.de. The interview also showed products of RAYPACK.AI GmbH, highlighting a range of object and person recognition applications.

The AI strategy is a very comprehensive document with 77 individual measures. However, since concrete implementation plans with associated budgets are largely missing, these must be specified quickly. "The strategy paper is a good starting point for an intensive process, which must now be started immediately with all participants. Otherwise, this is just a nice collection of ideas."

Click here for the interview and contact us at info@aiso-lab.de for more information!

Have you ever wanted to make objects disappear from an image?

Source: http://deepangel.media.mit.edu

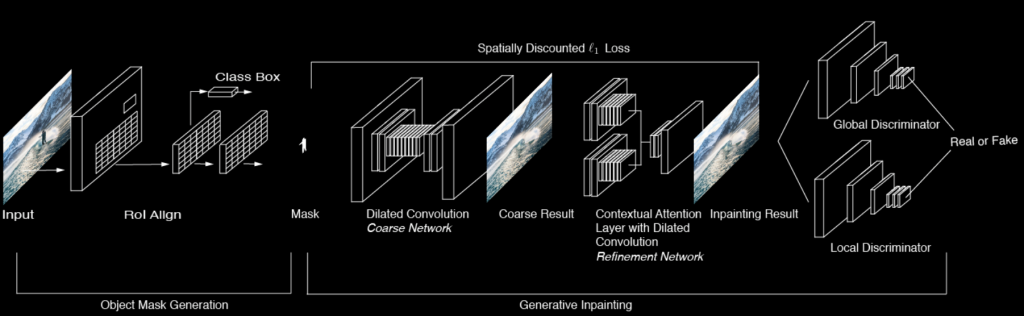

Now, DeepAngel can make this happen. Developed by researchers at MIT, DeepAngel is an Artificial Intelligence that erases objects from photographs using neural networks. The algorithm uses Detectron from Facebook AI Research for object recognition and DeepFill, a neural network, for inpainting. Objects to be removed are first detected by Detectron and then painted over by DeepFill so that the filled region can hardly be distinguished from the surrounding background.
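The two-stage idea (detect, then fill) can be sketched as a small pipeline. This is a toy stand-in, not DeepAngel's code: `detect_objects` is a hypothetical detector that simply boxes all bright pixels instead of running Detectron, and `naive_inpaint` swaps DeepFill's neural network for a naive rule that fills each masked pixel with the mean of the unmasked pixels. Images are assumed to be small grayscale grids of floats.

```python
from typing import List, Tuple

Image = List[List[float]]         # grayscale image, rows of pixel values
Mask = List[List[bool]]           # True where a pixel should be erased
Box = Tuple[int, int, int, int]   # (top, left, bottom, right), bottom/right exclusive


def detect_objects(image: Image) -> List[Box]:
    """Hypothetical stand-in for an object detector such as Detectron:
    returns one bounding box around all pixels brighter than 0.5."""
    hits = [(r, c) for r, row in enumerate(image)
            for c, v in enumerate(row) if v > 0.5]
    if not hits:
        return []
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return [(min(rows), min(cols), max(rows) + 1, max(cols) + 1)]


def boxes_to_mask(image: Image, boxes: List[Box]) -> Mask:
    """Mark every pixel inside any detected box for removal."""
    mask = [[False] * len(row) for row in image]
    for top, left, bottom, right in boxes:
        for r in range(top, bottom):
            for c in range(left, right):
                mask[r][c] = True
    return mask


def naive_inpaint(image: Image, mask: Mask) -> Image:
    """Toy replacement for DeepFill: fill masked pixels with the mean
    of all unmasked pixels (a real inpainting network uses context)."""
    known = [v for row, mrow in zip(image, mask)
             for v, m in zip(row, mrow) if not m]
    fill = sum(known) / len(known) if known else 0.0
    return [[fill if m else v for v, m in zip(row, mrow)]
            for row, mrow in zip(image, mask)]


def erase_objects(image: Image) -> Image:
    """Detect objects, turn their boxes into a mask, then paint over the mask."""
    return naive_inpaint(image, boxes_to_mask(image, detect_objects(image)))
```

Keeping detection and filling as separate functions mirrors the division of labor described above: either stage can be replaced by a stronger model without changing the other.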

Source: http://deepangel.media.mit.edu

If you have any questions about image processing using AI or would like to know how you can add value to your images or videos, please contact us at info@aiso-lab.com.

We are delighted to invite you to KINNOVATE, organized by Euroforum and taking place on 11 and 12 December 2018 in Munich. The event focuses on implementing Artificial Intelligence solutions in business practice. You will get an overview of current applications and find partners for pilot projects and concrete business models.

We look forward to presenting our innovative use cases and highly scalable AI software solutions, such as Visual Computing, one of our primary areas of expertise.

To support your participation, we have a special offer for you! Subscribe now to our newsletter and get the exclusive opportunity to attend the event at a discount of up to €600. Click here to subscribe and stay tuned for our next newsletter!

Further information about the event can be found here.

Today and over the next two days, the Medientage will take place in Munich. We are pleased to present our services for AI-based automatic indexing of videos and archive material.

You will find us at the booth of Deutsche Telekom. We look forward to your visit!

https://www.t-systems.com/de/de/ueber-uns/unternehmen/messen-events/messe-detail/medientage-muenchen-2018-826952